- Do we need to install Apache Spark: how to
- Do we need to install Apache Spark: update
- Do we need to install Apache Spark: download

Installing the latest version of Apache Spark is the first step towards learning it. In this section, we install Apache Spark on Ubuntu 18.04 for development purposes only, so the installation is in standalone mode and runs on only one machine. For production, Spark is deployed in clustered mode over a number of nodes for distributed, large-scale data processing; a production deployment can use standalone, YARN, or Mesos mode.

Do we need to install Apache Spark: how to

When you browse to that URL, you should see the Spark master web UI. Starting only the master service does not start any workers, so no worker is listed yet; once you start a worker service, a new node appears in the list. The master page also shows the master URL in the form spark://HOST:PORT, which worker services use to connect to this host. On my host the master URL is spark://Linuxways.localdomain:7077, so I start a worker process on the same machine with:

- `start-worker.sh spark://Linuxways.localdomain:7077`

(`start-workers.sh`, plural, instead starts workers on the hosts listed in `conf/workers` over SSH.) You can also open the Spark shell by running `spark-shell`.

In this article, I attempted to make the process as understandable as I can. I hope it helps you install and configure Apache Spark on Ubuntu.

How to install Spark on Ubuntu 18.04 and test it? In this section, we install Spark on Ubuntu 18.04 and then use the pyspark shell to test the installation.
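In the Spark 3.x sbin scripts, the master defaults to the host name reported by `hostname -f` and port 7077 unless `SPARK_MASTER_HOST` or `SPARK_MASTER_PORT` is set. The sketch below rebuilds that default URL so a single-machine worker can be pointed at the local master. The function names `spark_master_url` and `start_local_worker` are hypothetical, and the defaults are assumptions that no longer hold if you changed the master configuration.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for a single-machine standalone cluster.

# Build the master URL the way the Spark 3.x sbin scripts default it:
# SPARK_MASTER_HOST (else `hostname -f`) and SPARK_MASTER_PORT (else 7077).
spark_master_url() {
  local host=${SPARK_MASTER_HOST:-$(hostname -f)}
  local port=${SPARK_MASTER_PORT:-7077}
  echo "spark://${host}:${port}"
}

# Start one worker on this machine and register it with that master.
# start-worker.sh (singular) takes the master URL as its argument.
start_local_worker() {
  start-worker.sh "$(spark_master_url)"
}
```

After `start-master.sh`, calling `start_local_worker` should make a worker appear on http://localhost:8080. If the URL printed by `spark_master_url` differs from the one shown at the top of the master page, pass the page's URL to `start-worker.sh` directly.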

The Spark master web UI listens on port 8080 by default, so you can check it by browsing to http://localhost:8080.

Now, extract the downloaded binary file using the following tar command: `tar -xvzf spark-3.1.2-bin-hadoop3.2.tgz`. Lastly, move the extracted Spark files to the /opt directory: `sudo mv spark-3.1.2-bin-hadoop3.2 /opt/spark`.

Setting Up Environment Variables

For the Spark commands to work without a complete path, a few environment variables need to be set in the .profile file. You can do this either with the echo command or manually with your preferred text editor. The easier way is to execute the following echo commands:

- `echo 'export SPARK_HOME=/opt/spark' >> ~/.profile`
- `echo 'export PATH=$PATH:/opt/spark/bin:/opt/spark/sbin' >> ~/.profile`
- `echo 'export PYSPARK_PYTHON=/usr/bin/python3' >> ~/.profile`

The >> operator appends each line to the bottom of .profile (a single > would overwrite the whole file), and the single quotes keep $PATH from being expanded until the profile is loaded. Now, run `source ~/.profile` to apply the new environment variables.

Deploying Apache Spark

Now that everything is set up, we can start the master service as well as the worker service. Start the master with `start-master.sh`. Our Spark master service is now running, with its web UI on port 8080.
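If you want to repeat the extract, move, and .profile steps safely, they can be wrapped in small functions. This is a minimal sketch, not the article's method: the names `install_spark_tarball`, `append_once`, and `configure_spark_env` are hypothetical, and `append_once` adds an idempotency check so that re-running the setup does not duplicate the exports.

```shell
#!/usr/bin/env bash
# Hypothetical helpers mirroring the extract/move and .profile steps.

# Extract a Spark tarball next to itself and move its top-level directory
# to DEST (default /opt/spark, which normally needs sudo).
install_spark_tarball() {
  local tgz=$1
  local dest=${2:-/opt/spark}
  local workdir top
  workdir=$(dirname "$tgz")
  # First archive entry is the top-level directory, e.g. spark-3.1.2-bin-hadoop3.2/
  top=$(tar -tzf "$tgz" | head -n 1 | cut -d/ -f1)
  tar -xzf "$tgz" -C "$workdir"
  mv "$workdir/$top" "$dest"
}

# Append LINE to FILE unless FILE already contains exactly that line.
append_once() {
  local line=$1 file=$2
  touch "$file"
  grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Write the three exports used in this article to a profile file.
# Single quotes keep $PATH literal, so it is expanded at login, not now.
configure_spark_env() {
  local profile=${1:-$HOME/.profile}
  append_once 'export SPARK_HOME=/opt/spark' "$profile"
  append_once 'export PATH=$PATH:/opt/spark/bin:/opt/spark/sbin' "$profile"
  append_once 'export PYSPARK_PYTHON=/usr/bin/python3' "$profile"
}
```

`install_spark_tarball` needs root for the default /opt/spark destination, whereas `configure_spark_env` should run as your normal user so that it edits your own ~/.profile.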

Do we need to install Apache Spark: download

Now, check the Scala version with `scala -version` to verify the installation.

There is no official apt repository for Apache Spark, but you can download a pre-compiled binary from the official site. Use the following wget command and link to download the binary file.
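The original download link is not shown here. As an assumption, the sketch below fetches the release from the Apache archive (archive.apache.org/dist/spark/), which keeps past Spark releases; the version and Hadoop package default to 3.1.2 and hadoop3.2 to match the spark-3.1.2-bin-hadoop3.2 directory used in the extraction step. The variable and function names are hypothetical.

```shell
#!/usr/bin/env bash
# Assumption: download from the Apache archive, which keeps old releases
# under dist/spark/spark-<version>/. Override the two variables to pick
# another release; the defaults match the directory moved to /opt/spark.
SPARK_VERSION="${SPARK_VERSION:-3.1.2}"
HADOOP_PACKAGE="${HADOOP_PACKAGE:-hadoop3.2}"

# File name of the pre-built binary, e.g. spark-3.1.2-bin-hadoop3.2.tgz
spark_tarball_name() {
  echo "spark-${SPARK_VERSION}-bin-${HADOOP_PACKAGE}.tgz"
}

# Full download URL for that file
spark_download_url() {
  echo "https://archive.apache.org/dist/spark/spark-${SPARK_VERSION}/$(spark_tarball_name)"
}

# Download into the current directory; -c resumes a partial download
download_spark() {
  wget -c "$(spark_download_url)"
}
```

With the defaults, `download_spark` runs `wget -c https://archive.apache.org/dist/spark/spark-3.1.2/spark-3.1.2-bin-hadoop3.2.tgz`. The official download page links to faster mirrors, but those typically carry only current releases.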

Do we need to install Apache Spark: update

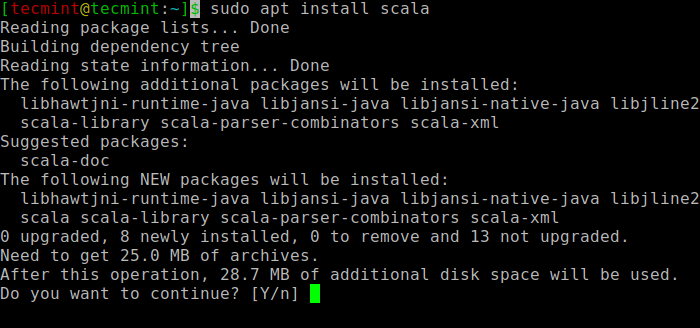

Apache Spark is an open-source computational framework for large-scale analytical data processing and machine learning. It supports several languages, including Scala, R, Python, and Java, and provides high-level tools such as Spark SQL, MLlib for machine learning, GraphX for graph processing, and Spark Streaming. In this article, you will learn how to install and configure Apache Spark on Ubuntu; to demonstrate the flow, I used an Ubuntu 20.04 LTS system.

Before installing Apache Spark, make sure Java is installed on your system; this article also installs Scala. If you haven't installed Java and Scala yet, follow the process below.

For Java, we install OpenJDK 8, but you can install another version supported by your Spark release:

- `sudo apt update`
- `sudo apt install openjdk-8-jdk`

To verify the Java installation, run `java -version`.

As for Scala, it is a concise programming language that combines object-oriented and functional programming. It runs on the JVM, which gives easy access to that platform's large library ecosystem, and it can also target JavaScript runtimes, which helps in building high-performance systems. Execute the following apt commands to install Scala:

- `sudo apt update`
- `sudo apt install scala`
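If you script the setup, a small check can tell you which prerequisites are still missing and which Java major version `java -version` reports. The sketch below uses hypothetical helper names (`missing_prereqs`, `java_major_version`) that are not part of Spark or Ubuntu; it only inspects the system and installs nothing.

```shell
#!/usr/bin/env bash
# Hypothetical helpers for checking the Spark prerequisites; they only
# inspect the system and never install anything.

# Print the names of the given commands that are NOT on PATH
# (empty output means everything is present).
missing_prereqs() {
  local tool
  local missing=()
  for tool in "$@"; do
    command -v "$tool" >/dev/null 2>&1 || missing+=("$tool")
  done
  echo "${missing[*]}"
}

# Read `java -version` output on stdin and print the major version.
# Java 8 and older report "1.8.0_292"; Java 9+ report "11.0.11", "17", ...
java_major_version() {
  local ver
  ver=$(sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1)
  case "$ver" in
    1.*) ver=${ver#1.} ;;  # strip the legacy "1." prefix
  esac
  echo "${ver%%[._-]*}"    # keep everything before the first . _ or -
}
```

For example, `missing_prereqs java scala` prints the tools still to install. Because `java -version` writes to stderr, pipe it as `java -version 2>&1 | java_major_version`; after installing openjdk-8-jdk this should print 8.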
